BunnyVision

Chapter 2

Fellowship of the Bunny

Who you share your work with is as important as what you do.

The concept of BunnyVision on the bicycle is simple. We intend to illustrate what is behind you in a non-distracting manner and provide useful feedback using real-time object detection technologies. The purpose of technology is to give your mind what it needs, when it needs it, so you can ride freely and safely. The “Mark-I” prototype system will draw upon many engineering disciplines to achieve the initial product vision.

- Embedded Software Engineering : Everything from C code to writing our own assembler for the NXP MCXN947 EZH/SmartDMA.

- Electronics Engineering and Printed Circuit Board Design : Power conversion, high-speed memory interfaces, distributed architectures, fine-pitch BGAs, packaging and assembly.

- Object Detection and Machine Learning: Building our own pipeline for BunnyVision to run on the MCXN947 eIQ Neutron and future variants with extended capabilities.

- Systems Engineering : Constructing a development pipeline so we can prototype, incrementally improve and regression test.

- UI/UX : Presenting data graphically in a way that is purposeful and non-distracting using bespoke graphics rendering tools.

- Industrial Design and Mechanical Engineering : Purpose built enclosures and functional design ergonomics.

I fundamentally believe that some of the best technology is inseparable from the cultural zeitgeist it exists in. Remove the people, culture and lived experience, and you are left with essentially nothing. I can still remember riding the school bus to my best friend Adrian’s house on a Friday afternoon. Everything about the experience is burned into my memory… What the bus smelled like and what the Orange Crush soda tasted like as we enjoyed hours of Metal Gear and Mike Tyson’s Punch-Out!! on our 6502-powered Nintendo Entertainment System. This sentiment existed for many people in the 1980s across many different domains including music and art. If you subtract the time, people and culture, you have boring 8-bit technology.

I am weaving in much of the story behind BunnyVision for similar reasons. Before you engineer the circuits and software, you need to engineer the team. Combining technology with passion, purpose, and good people can make for the best products. I certainly get excited about raw technology, but without the applications and the people who benefit, the tech is an empty shell.

The non-linear path can start where you least expect it.

Finding the right people is a combination of raw effort, time, serendipity, trust and faith. Crossing this chasm is often difficult for those from an engineering background because it requires soft skills and the ability to make decisions on imperfect and sometimes unknowable information. It takes a certain amount of faith, a word not common in engineering vernacular. Once you can build a bridge across this chasm and have the courage to take the first steps across, you will find that your life will most certainly change.

In the case of BunnyVision, serendipity was a huge component that was built on efforts that began over a decade prior to the initial concept. In 2010, I gave a seminar at the Freescale Technology Forum on energy systems for robotics (Freescale and NXP Semiconductor merged in December of 2015). After the seminar, I was approached on the exhibition floor by Oscar Rodas and Eduardo Corpeño. Oscar and Eduardo were professors at Universidad Galileo in Guatemala City, Guatemala and wanted to invite me to participate in Foro de Innovación Tecnológica (FIT).

FIT is a yearly event that features seminars, workshops, and conferences for the students of Galileo. Oscar and Eduardo would bring in international guests to offer their students some new perspectives. I must admit that I was initially nervous about the proposition. My only knowledge of Guatemala was where it was on a map. I knew next to zero Spanish and had never traveled to Central America. My first question was “How is the food?”. They responded with large smiles and assured me that I would be well taken care of.

Stepping outside of your comfort zone comes with side effects. For me it resulted in experiencing some of the best pizza and coffee ever and initiated a relationship with good people who I consider life-long friends. Little did I know that this relationship that started in 2011 would help me more than a decade later.

Synchronicity.

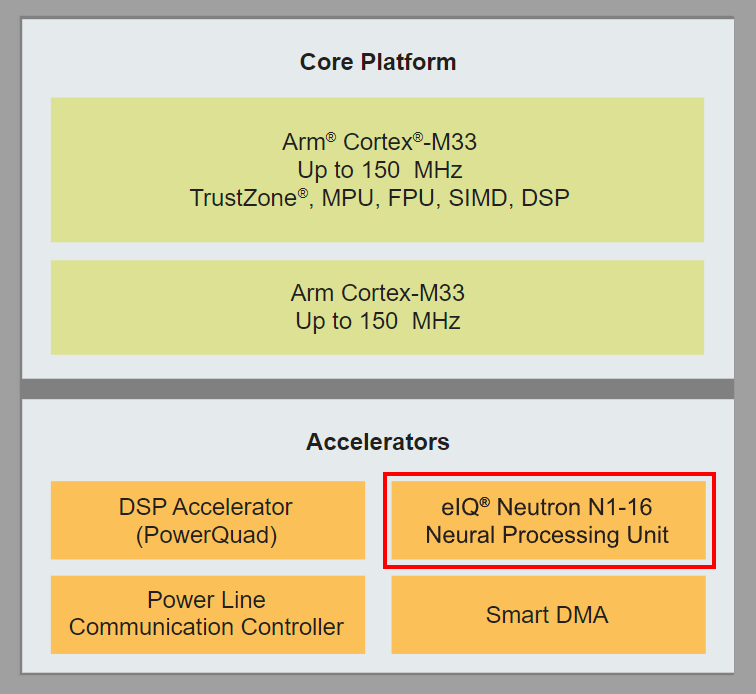

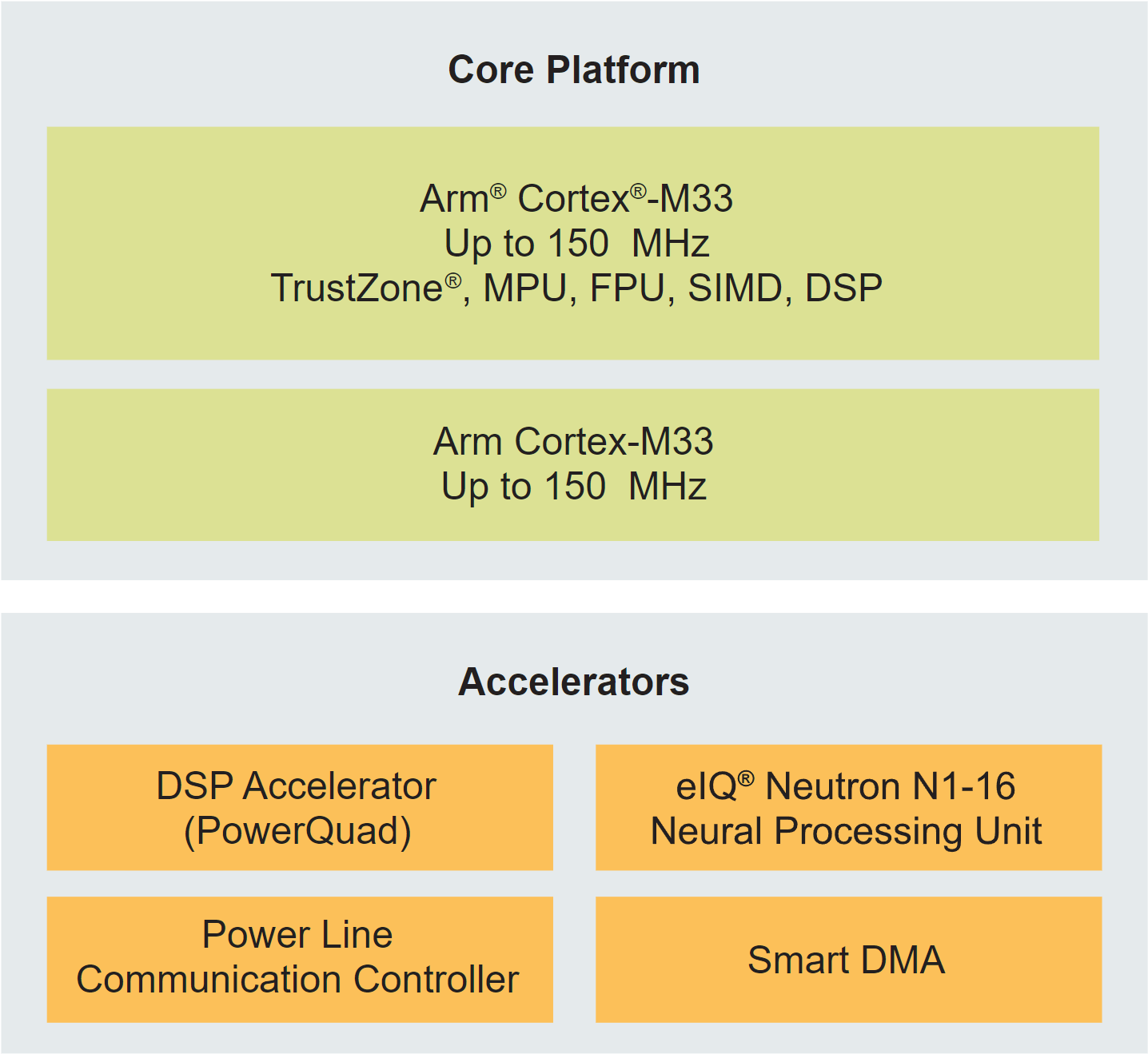

The Mark-I BunnyVision hardware will use the NXP MCXN947 microcontroller with the NXP eIQ Neutron Neural Processing Unit (NPU).

The eIQ Neutron NPU is used through a toolkit that translates a TensorFlow Lite model into a processing graph that can be accelerated on the NPU. When I saw an early version of the “face detect” demo for the MCX N947, I realized that I had a good candidate technology for the BunnyVision imaging sensor. It was just enough to implement what I envisioned.

Object detection models are not new. There is a wide range of models and training sets available in the public domain to precisely identify objects in a scene and classify behaviors. However, this use case doesn’t require highly complex models. In fact, quite the opposite. A simple model can solve enough of the problem such that the human brain can do the rest. Many of the object detection samples in the public domain are what I would classify as “toy models”. They are useful to understand the tech, but not well suited for a product, let alone a resource-constrained, battery-powered embedded device.

For BunnyVision, we will be training a purpose/application focused detector that is suitable for our hardware. There is a lot of work involved from collecting training data, to developing the processing pipeline to deploying the model. Future chapters will be diving into the model development.

"If you want to go fast, go alone; but if you want to go far, go together."

The surface area of the technology components for BunnyVision is quite large, and I knew I needed some help. In the Fall of 2023, things were getting busy at Wavenumber LLC. I needed some help with a new project unrelated to BunnyVision and work was piling up. Serendipity and synchronicity once again delivered. Building networks of people is extremely important. You simply don’t know what you don’t know, and a large, diverse network can help you when you least expect it.

For this other project (a story for another time), I needed to find someone who was aligned to the true full stack model and whom I could mentor. Because of the COVID-19 pandemic, I had not traveled to Guatemala for some time. However, I reconnected with Oscar and Eduardo to see if they could offer assistance with my current dilemma.

I described the various projects and mentioned that I really needed a padawan with a high midi-chlorian count. Not only did Eduardo have an immediate recommendation for my current project needs, Oscar reminded me of someone else from one of my original trips to Guatemala over a decade prior who could help with BunnyVision.

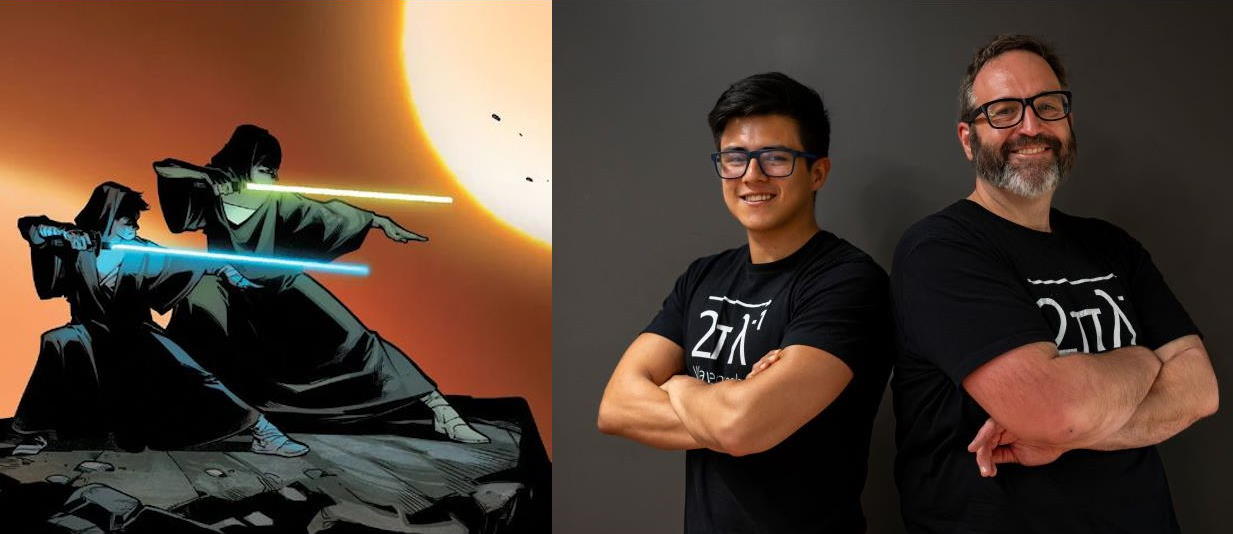

Jerry Palacios was one of Eduardo’s best students and he was looking for opportunities. He joined Wavenumber in the Fall of 2023 to start his path to becoming an Embedded Full Stack Master.

My best offer, both as a potential mentor and potential employer, was this:

“The path will be filled with many challenges that will vanquish the ordinary person. I can’t tell you that the fight will be easy. But I can promise that you will never fight alone.”

There were times in my life when I really needed a mentor, and I truly believe that one needs to have a lifelong attitude of embracing the role of being both teacher and student. My desire is simple. I hope that he, and anyone else that I have an opportunity to serve, will become better than me at everything. I can’t imagine a better outcome.

One of the foundational components of Wavenumber is to build an environment where teaching, learning and mentorship are baked into everything. "Putting out your own shingle" is an impossibly difficult task. At times it seems like the most difficult way to earn a living, one that no sane person would choose. However, the values of teaching, learning and mentorship are worth fighting for. It is why "Create, Communicate and Serve" is what we say we do.

At the same time I was meeting Jerry, I discovered that another former student at Galileo I had interacted with over a decade ago was doing work in areas that met the needs of BunnyVision. In 2012 and 2013, I brought the Freescale Cup (now NXP Cup) to FIT at Galileo. The Freescale (NXP) Cup was an autonomous car competition. Each team was given an identical chassis, motors, and battery. Teams had to build electronics and software that could navigate a track in the fastest time using a simple IR LED/detector-based vision system. At the time, we were using the FRDM-KL25Z board, based upon the Kinetis KL25Z Cortex-M0+ microcontroller.

The student who won the competition that year, Julio Fajardo, was clearly on the path to becoming a great “true full stack” engineer and researcher. I had not communicated with Julio in a while, and Oscar mentioned that he had been doing a significant amount of work in the areas of robotics and vision systems. Julio is the sub-director of the Turing Lab at Galileo, which is directed by Ali Lemus. Through the reconnection with Oscar and Eduardo, my past relationships with Julio and Ali came at just the right time.

I presented them with the BunnyVision concept, and it was clear we had the right people to help with getting the object detection system up and running. To help bring BunnyVision to life, three researchers from the Galileo Turing Lab are bringing their expertise and hard work: Juan Pablo Barrientos Linares, Guillermo Maldonado, and Jabes Guerra. To help manage the effort, we are supported by Carlos Aguilar. The team at Turing will be diving deep into developing the object detection model, working on the training sets, translating the model to the NPU in the MCXN947, and working on the graphics algorithms to present the scene on the BunnyVision reflective display.

One team, geographically distributed.

In March of 2024, I traveled to Guatemala City to spend 10 days working with the team and enjoy Central America. We had been collaborating remotely, but I felt it was important to meet in person to further develop the relationship. My last visit had been in 2017 and it was time to reconnect in person. While we have the tools to work together from any place in the world, nothing beats working in the same physical space. I also needed to meet Jerry in person so we could collaborate on another project.

With a complex effort like BunnyVision, it is important to have live, in-person discussions. It also helps set the tone for future online collaboration. There was a lot in my head regarding the “how”. Getting this in front of everyone else is key to success. The first order of business was to lay out the system architecture that allows us to break down the problem. We even managed to almost get everyone in a picture.

Unfortunately, Juan Pablo (JP) was not present at the time of this photo.

Nothing beats enjoying the company of good people and doing some work with the FRDM-MCXN947 development board.

And yes, the food was great. Ali Lemus, the director of the Turing lab and professor at Galileo, took me to La cocina de la Señora Pu. This restaurant is a modern interpretation of Mayan Cuisine.

I normally don’t post pictures of my food, but experiencing food and drink using traditional cacao was quite a treat. In addition to my wonderful time at Señora Pu's, I did enjoy the pizza as well. When I mention my travels to Guatemala, I say that the best pizza I have ever had is in Guatemala City. While it is certainly good, what makes it even better is having people to share it with.

Not directly related to BunnyVision, but I also reconnected with another interesting person during my trip.

During one of our work sessions, Julio brought Dr. Andrea Lara for a visit. Andrea had attended some of my first FIT sessions on embedded systems and acoustics (the science of sound) way back in 2012. Dr. Andrea Lara is now the Head of the Biomedical Engineering Department at Galileo University. Her research focuses on medical image analysis and deep learning applied to cardiac imaging, and she works with Julio and the other researchers at the Turing Lab. The BiomedLab is focused on the design and development of low-cost technologies and ML-based healthcare solutions. It was truly amazing to see what was going on and how things had changed over the past decade.

Another team member back in the USA.

In the Fall of 2023 I was introduced to Nathan Fikes via Bil Herd (one of the engineers instrumental in all of the awesome hardware that came out of Commodore).

Nathan is our 3D modeling / mechanical ninja. He is currently studying mechanical engineering at Virginia Tech. The concept model / animation shown in chapter 1 was done completely by Nathan in Blender, everything down to the links in the bicycle chain. This was yet another connection made by a chain of unlikely events that has been yielding awesome results.

The Mark-I system architecture.

The objective of the Mark-I system is to get hardware mounted on the bike to start performing its target function. The lived experience of the BunnyVision prototype system will help drive functional improvements in future iterations. Engineers can easily find themselves in a morass of decision-making fatigue that comes from a desire to make the “best” design choices at the outset. The reality of any effort is that you need to make decisions in a changing environment with imperfect information. The hardest part is getting to “something” from zero. Once a system is stood up, you can always improve and replace components.

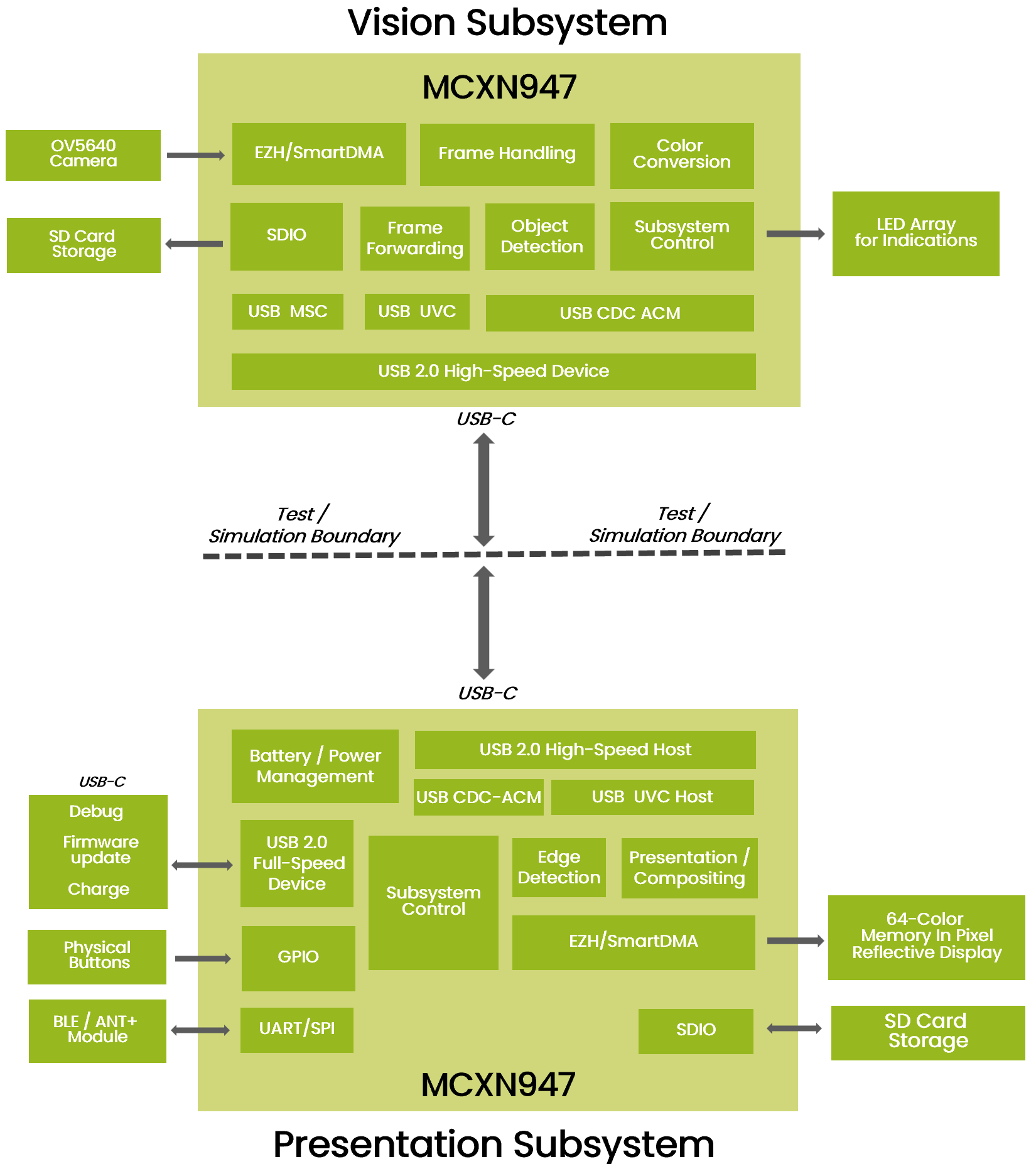

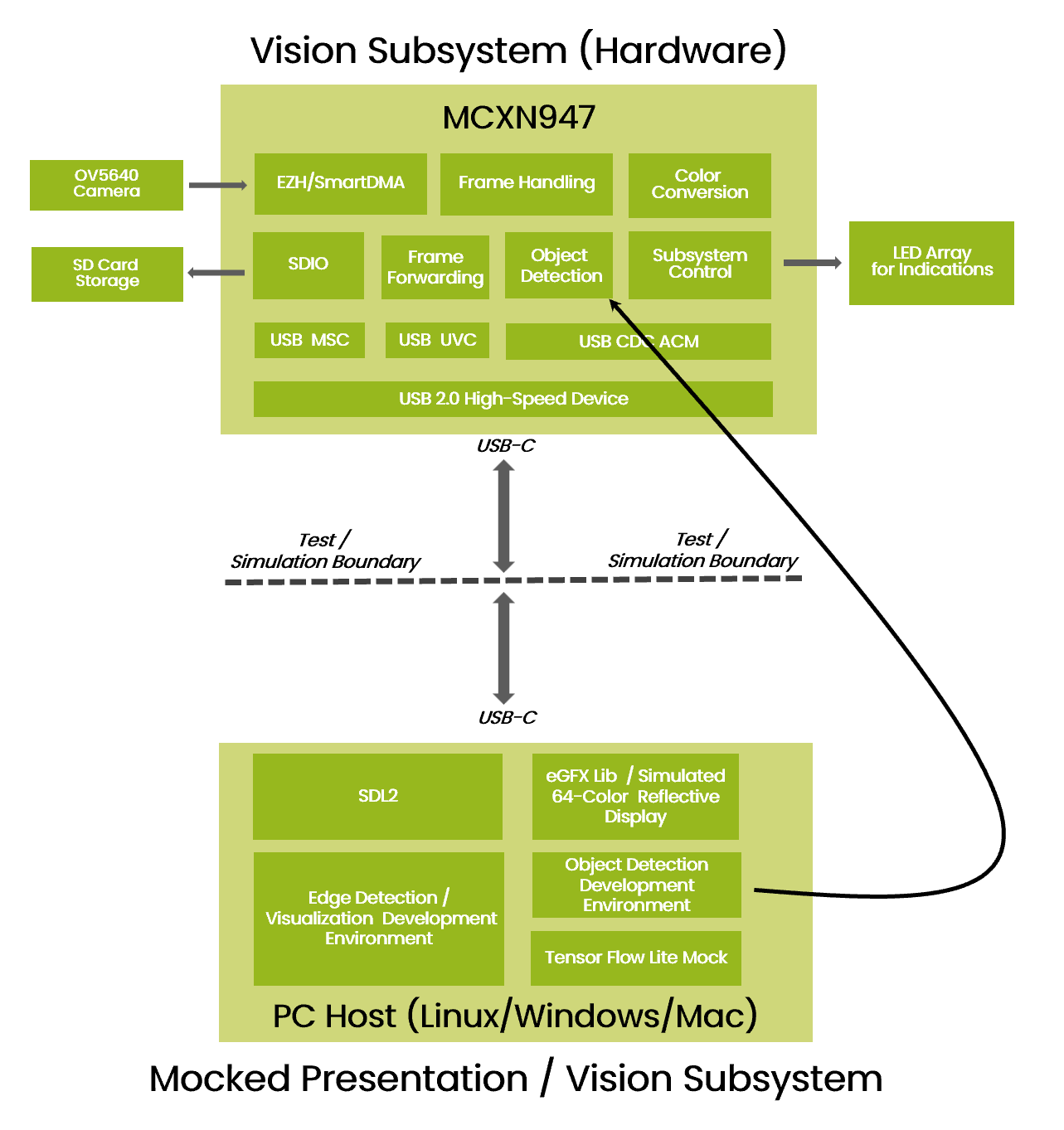

We drew clear boundaries at the outset to get a version of the system mounted on a bike so we can learn about and address the problems we don’t yet know about. The architecture of the Mark-I follows the physical prototype shown in the concept render. There are two main components: the rear-mounted camera/vision system and the front-mounted display/presentation system.

The Vision (camera) and Presentation (display) subsystems are physically and logically separated.

The physical separation of the two subsystems yields two side effects.

- There will need to be processing resources at both ends. We will use an MCXN947 in both subsystems.

- Since we are using the same MCU on both ends, we can make initial choices about where certain data processing functions live and still change them later.

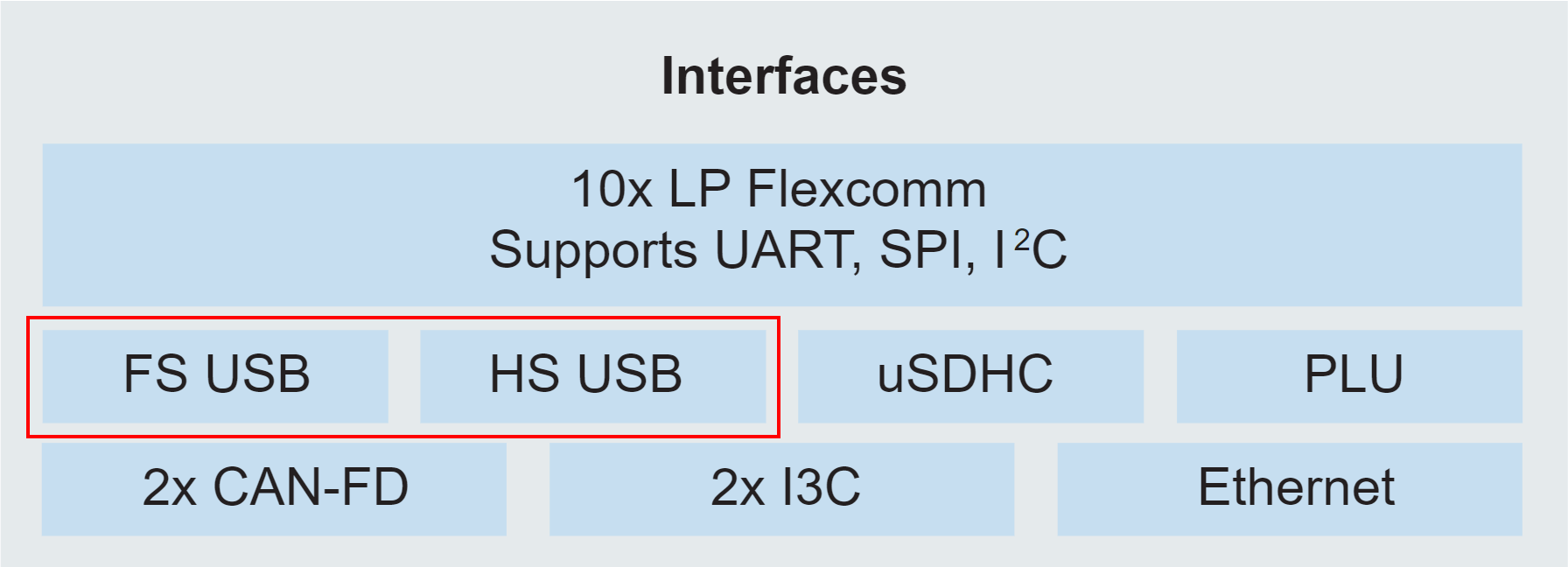

To connect the two subsystems, the Mark-I prototype will use a high-speed USB data link via a USB-C cable. The MCXN947 has several connectivity options. Built into the MCU are two USB interfaces, one high-speed and the other full-speed.

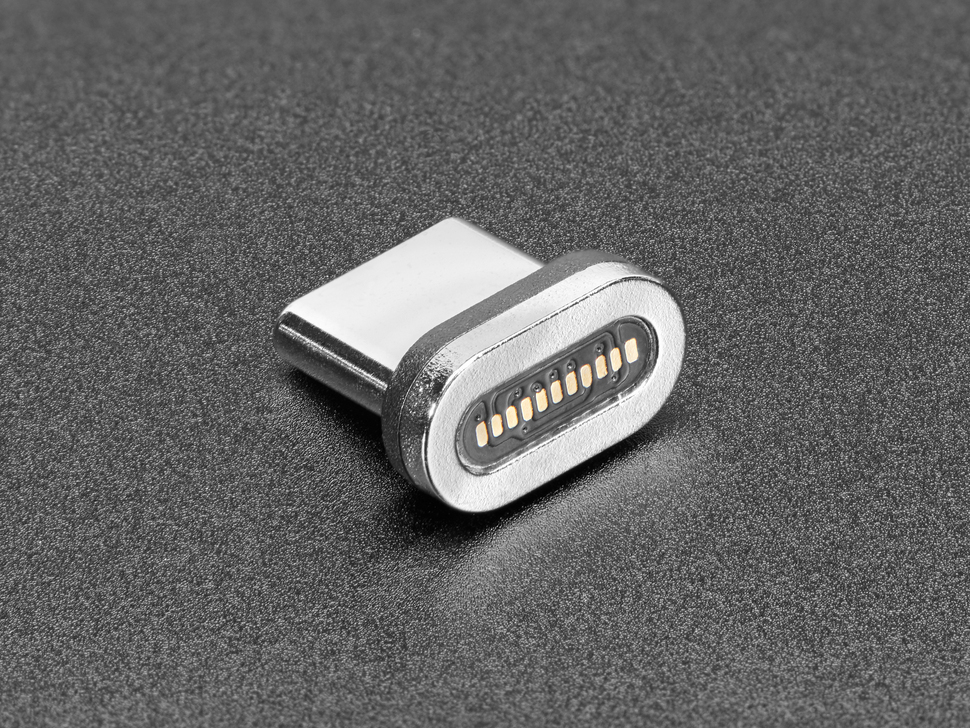

USB-C cabling works well for the prototype. The expected data rates, cable lengths and power delivery options are well suited to cabling through the bike chassis. We expect to be removing the devices often to charge, update and debug. This is where magnetic ends can be useful for prototyping.

In addition to the ubiquity of USB-C cabling, the USB data transport option was chosen for another reason: predefined data transport models that are well suited to the BunnyVision application. Presenting raw camera data over a USB Video Class (UVC) interface means that we can debug camera-related issues on a PC with minimal software development work on the host side. With UVC, we can capture, process, and visualize in a PC environment the exact same data that the embedded MCU will see. We can then make the USB device a composite of the UVC interface, a Mass Storage Class (MSC) interface and a Communications Device Class (CDC) interface. The CDC interface can be used for transmitting object inference information, implementing a debug terminal/shell, and enabling simple controls for the LED indications (turn signals).

The Vision Subsystem will have an SD card interface for recording training data in situ. The USB MSC interface will simplify extraction of the raw data. It also helps that Jerry Palacios / Wavenumber LLC helped get the MCXN947 added to the open source TinyUSB stack.
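To make the idea of sending object inference information over the CDC interface a little more concrete, here is a minimal host-side sketch in Python. The record layout (sync byte, class id, confidence, bounding box, checksum) and every name in it are hypothetical, invented purely for illustration; this is not the BunnyVision protocol. It only shows how a small fixed-size record could be packed by one end (or by a simulator) and recovered from a noisy serial byte stream by the other.

```python
import struct

# Hypothetical record layout (an assumption for illustration only):
#   sync (u8, 0xB5), class_id (u8), confidence (u8, 0..255),
#   x, y, w, h (u16 little-endian each),
#   checksum (u8, sum of all previous bytes modulo 256)
SYNC = 0xB5
_BODY = struct.Struct("<BBBHHHH")   # everything except the checksum
RECORD_SIZE = _BODY.size + 1


def pack_detection(class_id, confidence, x, y, w, h):
    """Build one record, as a vision subsystem or a simulator might send it."""
    body = _BODY.pack(SYNC, class_id, confidence, x, y, w, h)
    return body + bytes([sum(body) & 0xFF])


def parse_detections(stream):
    """Scan a byte stream and return every record whose checksum is valid."""
    found = []
    i = 0
    while i + RECORD_SIZE <= len(stream):
        chunk = stream[i:i + RECORD_SIZE]
        if chunk[0] == SYNC and (sum(chunk[:-1]) & 0xFF) == chunk[-1]:
            _, class_id, conf, x, y, w, h = _BODY.unpack(chunk[:-1])
            found.append({"class_id": class_id,
                          "confidence": conf,
                          "box": (x, y, w, h)})
            i += RECORD_SIZE
        else:
            i += 1   # not a valid record here; resynchronize byte by byte
    return found
```

Because the same few lines can both generate and consume records, either end of the link could be replaced by a PC script during development, which is the same mocking idea applied to the data link.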

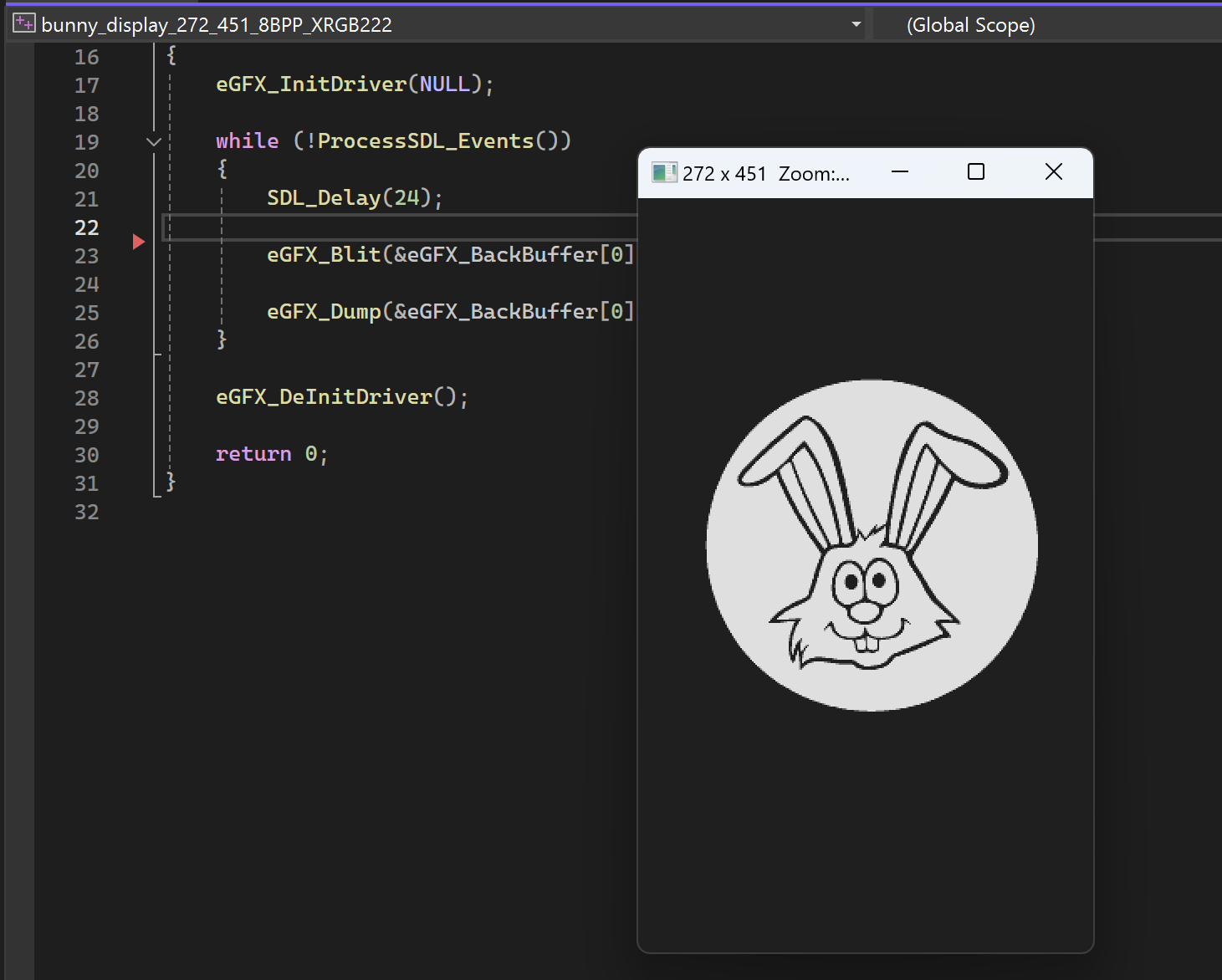

Clearly defined boundaries and interfaces allow subsystem development to occur in tandem. It also enables one end to be simulated to make testing and development simpler. The presentation subsystem will be mocked with an SDL2 based simulator running on a host PC.

The build environment will be CMake/Ninja based. The presentation subsystem can be completely mocked using a display simulation framework based on SDL2 and an in-house graphics library. The simulated display environment matches the display resolution and color space. Early on, we can know how things will look before the hardware is complete.

This also applies to the object detection code itself. The NXP eIQ toolkit translates a TensorFlow Lite model into an equivalent processing graph that is deployable on the MCX NPU. We can develop and test the algorithm in a PC environment and then easily move to the embedded target. In future chapters, we will be diving into the details of the processing pipeline.

Even though the MCXN947 runs at only 150 MHz, we have several computation resources ready to achieve our end vision. It might not seem like much to those accustomed to high-end processing systems, but we have the team and talent to make this work on MCX.

A few points that might not be immediately obvious.

- The eIQ Neutron N1-16 NPU can accelerate TensorFlow Lite graphs built from the operators it supports. With some creativity it can be used for general purpose image processing. For example, the first stage of an edge detector is a simple 2D convolution with a Gaussian kernel. The NPU is tuned for 8-bit operations, which is perfect for many CV algorithms.

- The SmartDMA accelerator is a fully programmable bit slice processor. It is used to synthesize the camera interface but will also be used for some color space conversion. We even wrote our own on-platform assembler (it runs on the CM33) called "BunnyBuild".

- The “Power Line Communication Controller” is actually a 32-bit programmable DSP known as the CoolFlux. We can choose to offload some of the computer vision tasks to this resource.

- The MCX N947 is a dual-core Cortex-M33 device. One of these cores could be tasked with system level supervision and the other with integer/fixed point 2D signal processing.

- The PowerQuad is a dedicated coprocessor for operations such as matrix multiplication, FFTs and one-dimensional convolution. With some creativity, it can be purposed to help in the pipeline.
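The Gaussian first stage mentioned in the NPU bullet above is easy to prototype on a PC with nothing but integer arithmetic. The sketch below is a plain-Python reference, not NPU or eIQ code. It assumes an 8-bit grayscale image stored as a list of rows and uses the common 3x3 binomial approximation of a Gaussian kernel. The function name and the clamp-at-the-border edge handling are my own choices for illustration.

```python
# 3x3 binomial approximation of a Gaussian kernel. The weights sum to 16,
# so normalization is a right shift by 4 instead of a division.
KERNEL = ((1, 2, 1),
          (2, 4, 2),
          (1, 2, 1))


def gaussian_blur_u8(image):
    """Blur an 8-bit grayscale image (list of equal-length rows of 0..255)."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0
            for ky in range(3):
                # Clamp coordinates so border pixels reuse their nearest neighbor.
                sy = min(max(y + ky - 1, 0), height - 1)
                for kx in range(3):
                    sx = min(max(x + kx - 1, 0), width - 1)
                    acc += KERNEL[ky][kx] * image[sy][sx]
            out[y][x] = (acc + 8) >> 4   # add 8 (half of 16) to round to nearest
    return out
```

Everything here stays within small integers: the accumulator never exceeds 255 x 16, and the output stays in the 0..255 range. That is the kind of 8-bit-friendly workload the bullet describes, and a reference like this gives you known-good output to compare against once the same convolution is expressed as a graph for the accelerator.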

The distribution of processing tasks will be the subject of future chapters. There will be a lot of good things to talk about.

Moving to the hardware design and the good things to come.

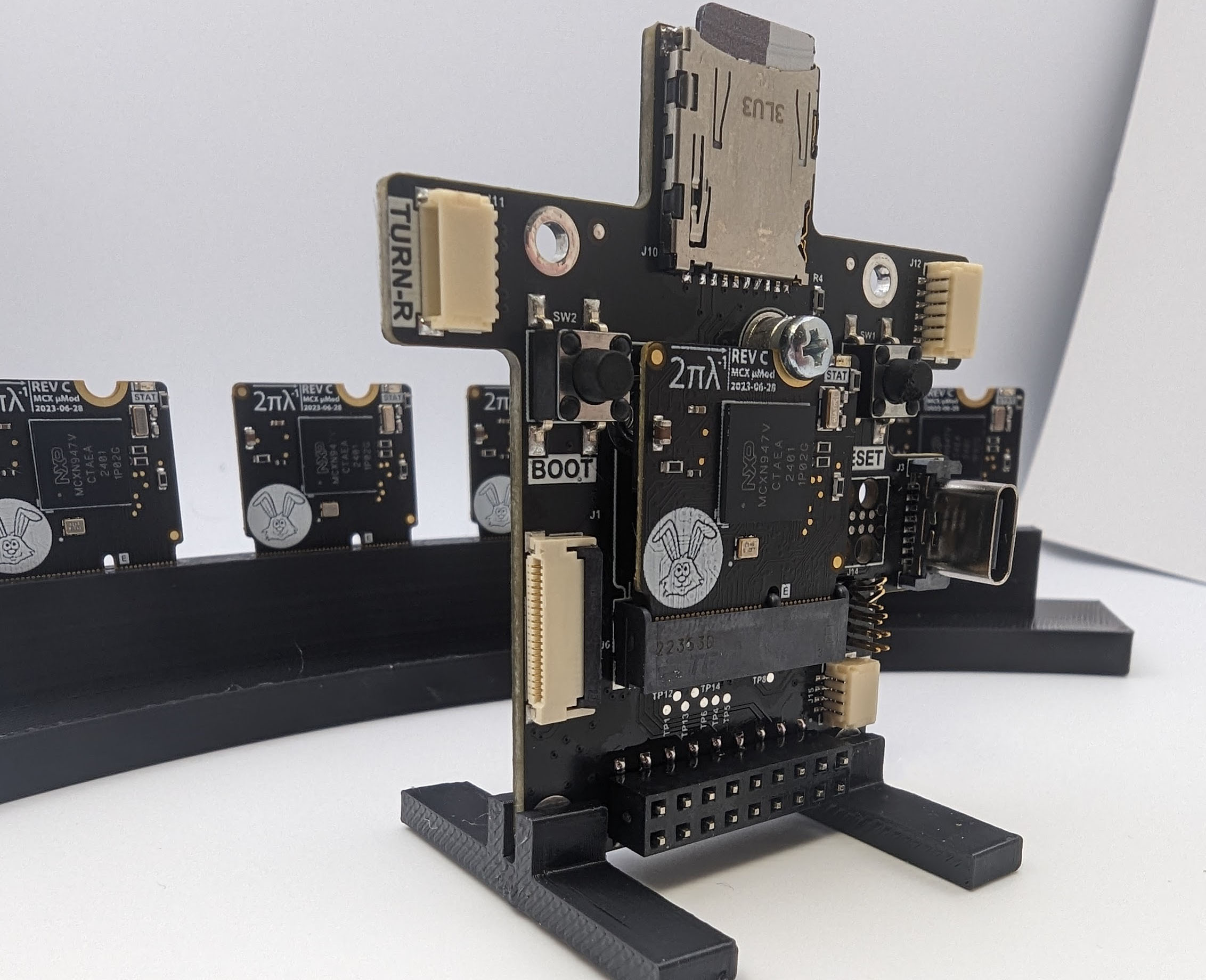

The prototype hardware for the vision subsystem is already complete. In the next chapter we will be diving into the “BunnyCam”. The BunnyCam is powered by a prototyping board known as the “BunnyBrain”. Early in development, all we had were a few samples of the MCXN947 in the VFBGA184 package. To facilitate prototyping, we built a breakout board based upon the SparkFun MicroMod format.

In the next chapter we are going to dive into the specifics of the VFBGA package, how to design with the MCXN947 and one of the coolest interfaces on the part : the EZH/SmartDMA. This peripheral is a fully programmable IO coprocessor that can be used for implementing custom/dedicated IO. It is *really* cool. Wavenumber (between Jerry and myself) has already built and tested an assembler and mini-linker for the SmartDMA that can run on the MCX CM33 core.

Nothing is more fun than a little assembly language. BunnyBuild enables the ARM Cortex-M33 to build SmartDMA programs, link variables between the cores, locate SmartDMA programs arbitrarily in RAM and swap SmartDMA programs on the fly.

The team is built, the architecture is in place and the hardware is on hand. Lots more in store for BunnyVision in 2024. If you are able, come to Guatemala and have coffee in front of one of the volcanoes. The landscape is beautiful.

I do need to work on my Spanish before my next trip. My attempt to get a “Latte with an extra shot” didn’t work out as planned.